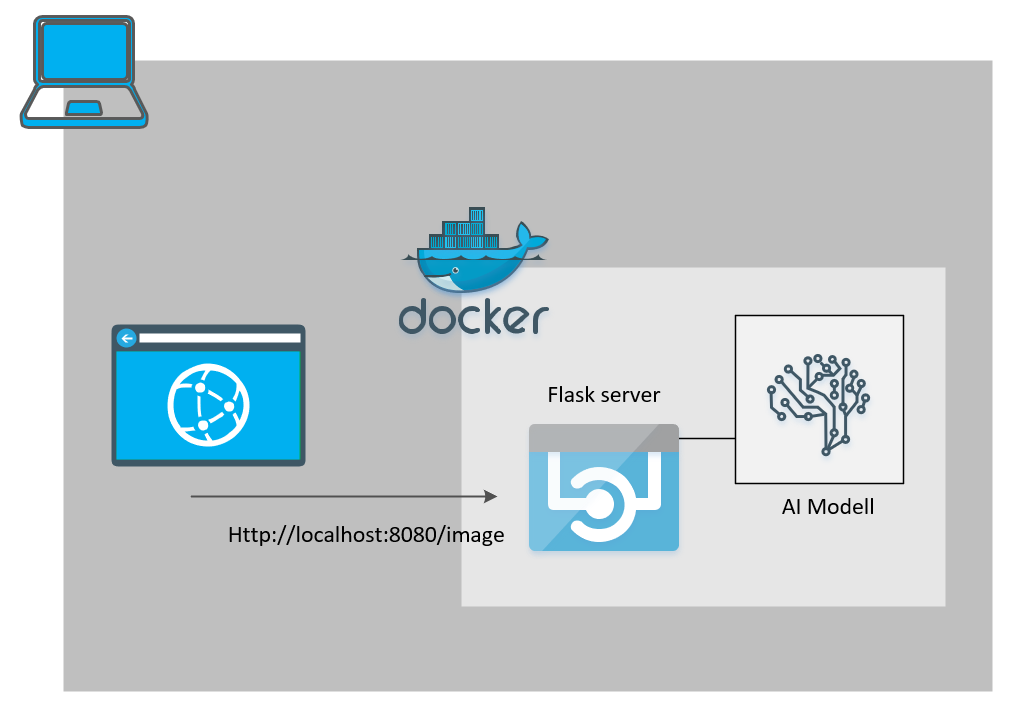

How to: Send images from Azure Logic Apps to a Custom API – as Multipart Form-Data

Why send images to an API?

For a small private project, I wanted a Logic App to be triggered whenever new images were generated in Azure Blob Storage. The Logic App would then display those images in Microsoft Teams using Adaptive Cards.

The problem: some …